Sheet Music Generation

Yuang Yuan, Haoran Yin, Pim Bax

Audio Separation

Audio separation is the first step of Sheet Music Generation: the raw audio is separated into individual tracks such as vocals, piano, and guitar. The separated tracks are then used to generate MIDI files.

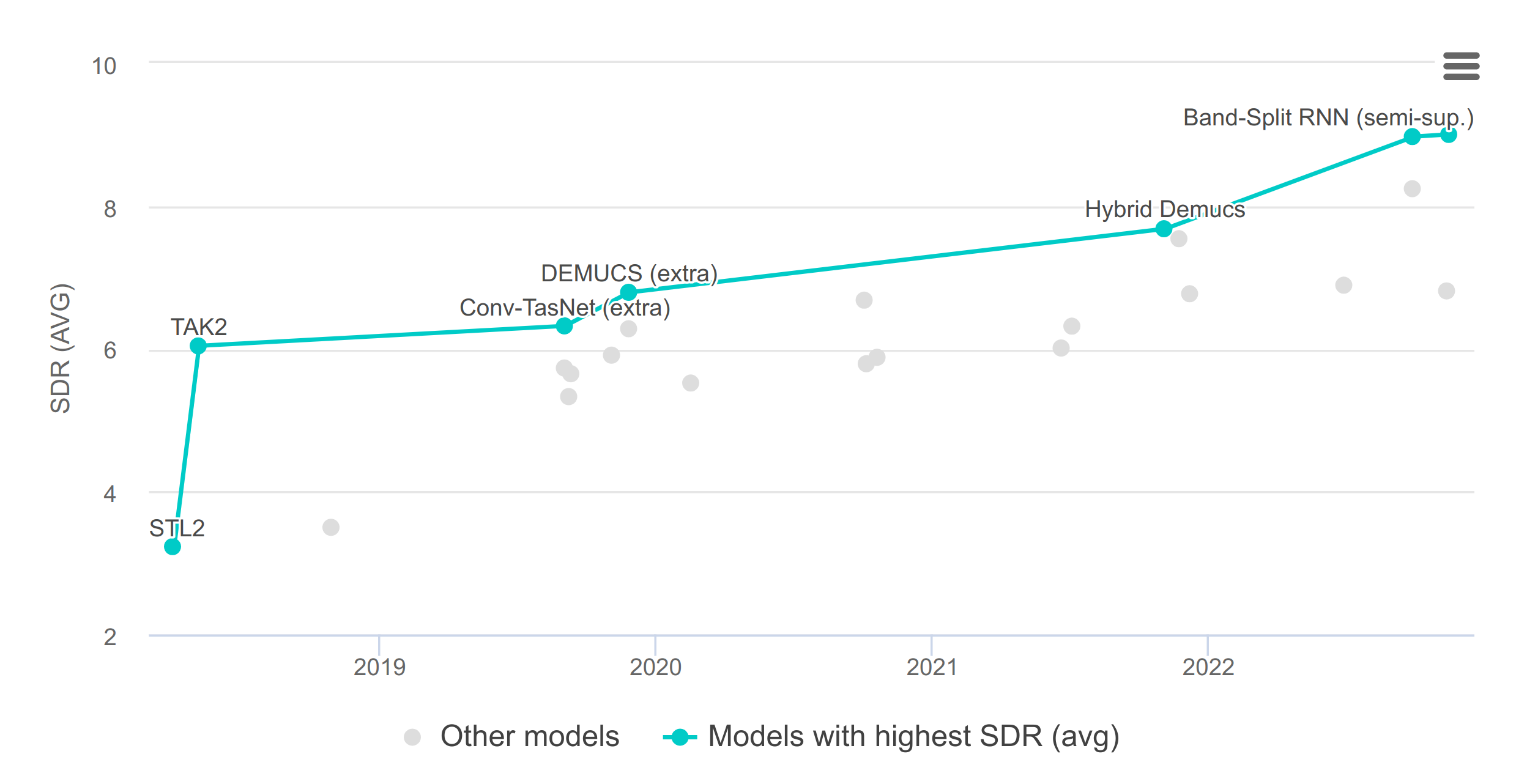

Our audio source separation method is based on Demucs [1], a hybrid Transformer-based model proposed by Facebook AI Research. Example separation results are shown below:

Raw audio (from MUSDB18):

Separated track (vocals):

Separated track (drums):

Separated track (bass):

Separated track (other):
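Separation results on MUSDB18 are usually scored against the ground-truth stems. The sketch below assumes the common additive model, in which the mixture is the sample-wise sum of its stems. It then computes a simplified global signal-to-distortion ratio (SDR), 10·log10(‖s‖² / ‖s − ŝ‖²), which leaves out the distortion filters of the full BSS Eval metric. It does not call Demucs. The stems and the estimate are made-up toy values, and the function names are hypothetical.

```python
import math
from typing import List, Sequence


def mix(stems: Sequence[Sequence[float]]) -> List[float]:
    """Additive mixing model: the mixture is the sample-wise sum of equal-length stems."""
    return [sum(samples) for samples in zip(*stems)]


def sdr(reference: Sequence[float], estimate: Sequence[float], eps: float = 1e-8) -> float:
    """Simplified SDR in dB: 10 * log10(||s||^2 / ||s - s_hat||^2).

    eps keeps the ratio finite when the estimate is perfect or the reference is silent.
    """
    signal_energy = sum(s * s for s in reference)
    error_energy = sum((s - e) ** 2 for s, e in zip(reference, estimate))
    return 10 * math.log10((signal_energy + eps) / (error_energy + eps))


# Toy example: two hypothetical stems and an imperfect estimate of the vocals.
vocals = [0.5, -0.5, 0.25, 0.0]
drums = [0.1, 0.1, -0.2, 0.3]
mixture = mix([vocals, drums])
vocals_estimate = [0.45, -0.5, 0.25, 0.05]
print("mixture:", mixture)
print(f"vocals SDR: {sdr(vocals, vocals_estimate):.2f} dB")
```

A higher SDR means the separated track is closer to the reference stem. Each additional 10 dB corresponds to a tenfold reduction in error energy relative to the signal.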

Audio to MIDI

MIDI (Musical Instrument Digital Interface) was proposed in the early 1980s to solve the problem of communication between electronic instruments. MIDI is the most widely used music format among arrangers, and it has been called "music notation that computers can understand." It does not store audio. Instead, it records music as digital control messages that describe notes [2].
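The sketch below builds raw MIDI channel messages by hand to show what these "digital control messages" look like. A Note On message consists of the status byte 0x90 combined with the channel number, followed by a note number and a velocity, each in the range 0-127. A Note Off message uses the status byte 0x80 instead. The sketch also maps a frequency to the nearest note number using the standard equal-temperament convention, A4 = 440 Hz = note 69. Inside a MIDI file, each message would also be preceded by a delta time, which this sketch leaves out. The function names are illustrative only.

```python
import math


def hz_to_midi(frequency_hz: float) -> int:
    """Nearest MIDI note number for a frequency, using A4 = 440 Hz = note 69."""
    return round(69 + 12 * math.log2(frequency_hz / 440.0))


def _channel_message(status: int, channel: int, data1: int, data2: int) -> bytes:
    """Pack a 3-byte channel message after checking value ranges."""
    if not 0 <= channel <= 15:
        raise ValueError("channel must be 0-15")
    if not (0 <= data1 <= 127 and 0 <= data2 <= 127):
        raise ValueError("data bytes must be 0-127")
    # High nibble of the status byte = message type, low nibble = channel.
    return bytes([status | channel, data1, data2])


def note_on(note: int, velocity: int, channel: int = 0) -> bytes:
    """Note On: status 0x90, then note number and velocity."""
    return _channel_message(0x90, channel, note, velocity)


def note_off(note: int, channel: int = 0) -> bytes:
    """Note Off: status 0x80, then note number and a release velocity of 0."""
    return _channel_message(0x80, channel, note, 0)


middle_c = hz_to_midi(261.63)  # 60
print(note_on(middle_c, 100).hex(" "))  # 90 3c 64
print(note_off(middle_c).hex(" "))  # 80 3c 00
```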

Because transcription quality is central to the whole pipeline, we chose a recent model, Basic Pitch, as the basis of this step [3]. Basic Pitch was released by Spotify alongside their ICASSP 2022 paper. It is a lightweight yet powerful Automatic Music Transcription (AMT) model. To train our model, we used three datasets: MTG-QBH, MAESTRO, and MUSDB18. Below are some transcription results for different instruments, including vocals.

Vocals (from MUSDB18):

MIDI Transcription:

Piano (from MTG-QBH):

Guitar (from MAESTRO):

MIDI Transcription:
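AMT models such as Basic Pitch first predict frame-level activations over pitch and time (a posteriorgram). These activations are then converted into discrete note events. The sketch below shows a deliberately simplified version of that post-processing. For each pitch, it applies a threshold to the activations, merges runs of consecutive active frames into notes, and discards runs that are too short. This is not Basic Pitch's actual algorithm, which also uses separate onset predictions. The frame rate, lowest pitch, threshold, and minimum length are hypothetical defaults.

```python
from typing import List, NamedTuple, Sequence


class NoteEvent(NamedTuple):
    pitch: int      # MIDI note number
    start_s: float  # onset time in seconds
    end_s: float    # offset time in seconds


def activations_to_notes(
    activations: Sequence[Sequence[float]],  # [frame][pitch_index], values in [0, 1]
    lowest_pitch: int = 21,                  # hypothetical: pitch index 0 -> MIDI 21 (A0)
    frame_rate: float = 100.0,               # hypothetical frames per second
    threshold: float = 0.5,
    min_frames: int = 2,
) -> List[NoteEvent]:
    """Threshold each pitch row and merge consecutive active frames into notes."""
    notes: List[NoteEvent] = []
    n_frames = len(activations)
    n_pitches = len(activations[0]) if n_frames else 0
    for p in range(n_pitches):
        start = None
        # Run one step past the last frame so a note still active at the end is closed.
        for t in range(n_frames + 1):
            active = t < n_frames and activations[t][p] >= threshold
            if active and start is None:
                start = t
            elif not active and start is not None:
                if t - start >= min_frames:
                    notes.append(NoteEvent(lowest_pitch + p, start / frame_rate, t / frame_rate))
                start = None
    return sorted(notes, key=lambda n: (n.start_s, n.pitch))


# Toy posteriorgram: 6 frames x 3 pitches (MIDI 60, 61, 62 when lowest_pitch=60).
frames = [
    [0.9, 0.0, 0.1],
    [0.8, 0.0, 0.7],
    [0.7, 0.6, 0.8],
    [0.1, 0.0, 0.9],
    [0.0, 0.0, 0.9],
    [0.0, 0.0, 0.2],
]
for note in activations_to_notes(frames, lowest_pitch=60):
    print(note)
```

In this toy input, the single active frame for MIDI 61 is dropped by `min_frames`. Real systems use similar minimum-length rules to suppress spurious short notes.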

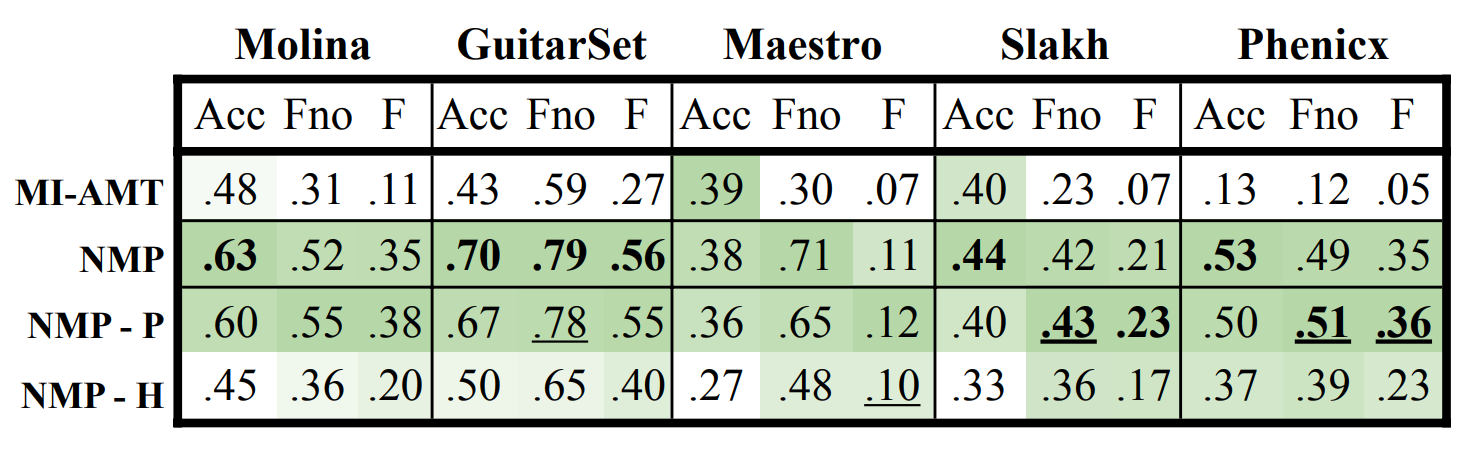

Figure: Comparison of Basic Pitch and a recent, strong baseline model, MI-AMT [4]. MI-AMT uses a U-Net architecture with an attention mechanism and outputs a note-activation posteriorgram. It has over 20M parameters in total and was trained on MAESTRO and MusicNet. The table reports average note event metrics on all test datasets for the baseline algorithm, the proposed method (NMP), and ablation experiments. The best score in each column is in bold. The shade of green indicates how far a score is from the best score, with the worst scores in white. All non-underlined results differ significantly from NMP for the same metric and dataset (paired t-test, p < 0.05).
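Note event metrics like those in the figure are typically computed by matching estimated notes to reference notes. The sketch below computes onset-only precision, recall, and F-measure. A match requires the same MIDI pitch and an onset within a tolerance (50 ms here, a common default). As a simplification, it matches greedily, taking the closest onsets first. Libraries such as mir_eval use an optimal bipartite matching instead. The exact evaluation settings behind the figure are not given here, and the function name is hypothetical.

```python
from typing import Sequence, Set, Tuple

Note = Tuple[int, float]  # (MIDI pitch, onset time in seconds)


def note_f_measure(
    reference: Sequence[Note],
    estimated: Sequence[Note],
    onset_tolerance: float = 0.05,
) -> Tuple[float, float, float]:
    """Onset-only note precision, recall, and F1 with greedy closest-onset matching."""
    # All same-pitch pairs within tolerance, closest onsets first.
    candidates = sorted(
        (abs(r_on - e_on), i, j)
        for i, (r_pitch, r_on) in enumerate(reference)
        for j, (e_pitch, e_on) in enumerate(estimated)
        if r_pitch == e_pitch and abs(r_on - e_on) <= onset_tolerance
    )
    used_ref: Set[int] = set()
    used_est: Set[int] = set()
    for _, i, j in candidates:
        # Each reference and each estimated note may be matched at most once.
        if i not in used_ref and j not in used_est:
            used_ref.add(i)
            used_est.add(j)
    matches = len(used_ref)
    precision = matches / len(estimated) if estimated else 0.0
    recall = matches / len(reference) if reference else 0.0
    f1 = 2 * precision * recall / (precision + recall) if matches else 0.0
    return precision, recall, f1


ref = [(60, 0.00), (64, 0.50), (67, 1.00)]
est = [(60, 0.02), (64, 0.60), (67, 0.98), (72, 1.50)]
print(note_f_measure(ref, est))
```

In this example, the estimated E4 onset is 100 ms late and the C5 note is spurious. That leaves 2 matches out of 4 estimates and 3 references, so precision is 0.5, recall is 2/3, and F1 is 4/7.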

MIDI to Note Sheet

To generate the sheet music, we used the Mingus library in Python to read the MIDI files. Mingus converts a MIDI file into its own internal representation, which can then be converted into a LilyPond string. This string is passed to LilyPond, a music engraving program, which renders the sheet music as a PDF.
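The sketch below builds a LilyPond-style string directly from MIDI note numbers, without Mingus, to show what such notation looks like. Each note number becomes a LilyPond note name. LilyPond uses Dutch names by default, so C sharp is written "cis". In absolute octave mode, "c'" is middle C, and the name is followed by a duration number. The function names are hypothetical. The sketch ignores rests, ties, bar lines, and key and time signatures, which Mingus and LilyPond handle in the real pipeline.

```python
from typing import Sequence, Tuple

# LilyPond's default (Dutch) note names; sharps are spelled with "is".
PITCH_NAMES = ["c", "cis", "d", "dis", "e", "f", "fis", "g", "gis", "a", "ais", "b"]


def midi_to_lilypond(pitch: int, duration: int) -> str:
    """Format one MIDI note as an absolute-octave LilyPond note, e.g. (60, 4) -> "c'4".

    duration is LilyPond's duration number: 1 = whole, 2 = half, 4 = quarter, ...
    A bare name such as "c" is the octave below middle C; each "'" raises it by
    an octave and each "," lowers it by an octave.
    """
    octave_shift = pitch // 12 - 4  # MIDI 48-59 -> no mark, 60-71 -> one "'"
    marks = "'" * octave_shift if octave_shift > 0 else "," * -octave_shift
    return f"{PITCH_NAMES[pitch % 12]}{marks}{duration}"


def notes_to_lilypond(notes: Sequence[Tuple[int, int]]) -> str:
    """Join (pitch, duration) pairs into a LilyPond music expression."""
    return "{ " + " ".join(midi_to_lilypond(p, d) for p, d in notes) + " }"


print(notes_to_lilypond([(60, 4), (62, 4), (64, 4), (66, 4), (67, 1)]))
```

Saving a string like this to a `.ly` file and running the `lilypond` command on it produces a PDF by default.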

When reading the MIDI files, we found that Mingus could not interpret the specific MIDI format produced by the earlier steps. We therefore had to modify the MIDI output of those steps before Mingus could read the files.
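A reasonable first step when diagnosing this kind of incompatibility is to inspect the file header. The sketch below reads the 14-byte header chunk ("MThd") at the start of a Standard MIDI File and reports three fields that can differ between MIDI writers and readers: the format type (0, 1, or 2), the number of tracks, and the time division. Treating these fields as the likely source of trouble is an assumption, not a record of the changes we actually made. The file name in the commented-out usage is a placeholder.

```python
import struct
from typing import NamedTuple


class MidiHeader(NamedTuple):
    format_type: int  # 0 = single track, 1 = simultaneous tracks, 2 = independent tracks
    n_tracks: int
    division: int     # ticks per quarter note when the top bit is 0, SMTPE timing otherwise


def read_midi_header(data: bytes) -> MidiHeader:
    """Parse the MThd chunk: 4-byte ID, 4-byte length, then three big-endian 16-bit fields."""
    chunk_type, length = struct.unpack(">4sI", data[:8])
    if chunk_type != b"MThd" or length < 6:
        raise ValueError("not a Standard MIDI File header")
    format_type, n_tracks, division = struct.unpack(">HHH", data[8:14])
    return MidiHeader(format_type, n_tracks, division)


# Hypothetical usage on a transcribed file:
# with open("transcription.mid", "rb") as f:
#     print(read_midi_header(f.read(14)))
example = b"MThd" + struct.pack(">IHHH", 6, 1, 2, 480)
print(read_midi_header(example))
```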

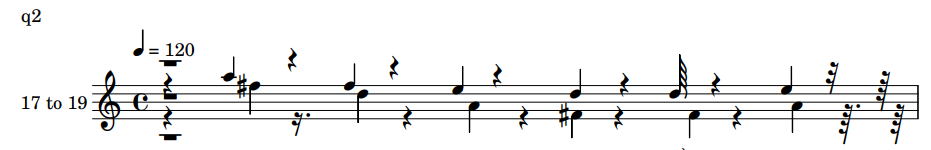

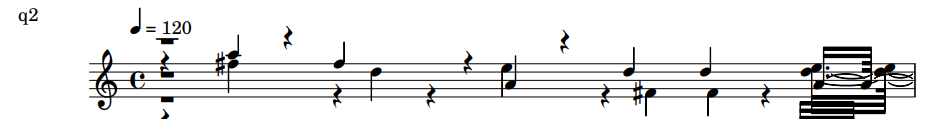

Here are the sheets for two audio clips from the previous section, representing the piano ground truth and the MIDI transcription, respectively.

Sheet of ground truth (piano)

Sheet of MIDI transcription

Applications

Play sheet

An Android application that plays sheet music. The video shows it playing the sheet generated from the MIDI file of the vocal sample above.

Rhythm Game

This is a team project from another course this semester, Multimedia Systems. Contributors: Haoran Yin, Nan Sai, and Yingjie Li.

References

- [1] Défossez, A., et al. (2019). Music source separation in the waveform domain. arXiv preprint arXiv:1911.13254.

- [2] Rothstein, J. (1992). MIDI: A comprehensive introduction (Vol. 7). AR Editions, Inc.

- [3] Bittner, R. M., Bosch, J. J., Rubinstein, D., Meseguer-Brocal, G., & Ewert, S. (2022, May). A Lightweight Instrument-Agnostic Model for Polyphonic Note Transcription and Multipitch Estimation. In ICASSP 2022-2022 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP) (pp. 781-785). IEEE.

- [4] Wu, Y. T., Chen, B., & Su, L. (2020). Multi-instrument automatic music transcription with self-attention-based instance segmentation. IEEE/ACM Transactions on Audio, Speech, and Language Processing, 28, 2796-2809.